This page contains some information on the numerical implementation of phydms, particularly in regards to how the likelihoods and its derivatives are computed.

It may be of help in trying to understand the code.

We begin by considering the basic ExpCM substitution model defined in Experimentally Informed Codon Models.

\(P_{r,xy}\) gives the substitution rate from codon \(x\) to codon \(y \ne x\) at site \(r\), and is defined by

(1)\[\begin{split}P_{r,xy} =

\begin{cases}

Q_{xy} \times F_{r,xy} & \mbox{if $x \ne y$,} \\

-\sum\limits_{z \ne x} F_{r,xz} Q_{xz} & \mbox{if $x = y$.}

\end{cases}\end{split}\]

where \(Q_{xy}\) is the rate of mutation from codon \(x\) to \(y\) and is defined by

(2)\[\begin{split}Q_{xy}

&=&

\begin{cases}

\phi_w & \mbox{if $x$ is converted to $y$ by a single-nucleotide transversion to $w$,} \\

\kappa \phi_w & \mbox{if $x$ is converted to $y$ by a single-nucleotide transition to $w$,} \\

0 & \mbox{if $x$ and $y$ differ by more than one nucleotide,} \\

\end{cases}\end{split}\]

where \(\kappa\) is the transition-transversion ratio and \(\phi_w\) is the expected frequency of nucleotide \(w\) in the absence of selection on amino-acid mutation (and so is subject to the constraint \(1 = \sum_w \phi_w\)).

The “fixation probability” \(F_{r,xy}\) of the mutation from \(x\) to \(y\) is

(3)\[\begin{split}F_{r,xy}

&=&

\begin{cases}

1 & \mbox{if $\mathcal{A}\left(x\right) = \mathcal{A}\left(y\right)$} \\

\omega & \mbox{if $\mathcal{A}\left(x\right) \ne \mathcal{A}\left(y\right)$ and $\pi_{r,\mathcal{A}\left(x\right)} = \pi_{r,\mathcal{A}\left(y\right)}$} \\

\omega \times \frac{-\beta \ln\left(\pi_{r,\mathcal{A}\left(x\right)} / \pi_{r,\mathcal{A}\left(y\right)}\right)}{1 - \left(\pi_{r,\mathcal{A}\left(x\right)} / \pi_{r,\mathcal{A}\left(y\right)}\right)^{\beta}} & \mbox{otherwise.}

\end{cases}\end{split}\]

where \(\pi_{r,a}\) is the preference of site \(r\) for amino acid \(a\), \(\operatorname{A}\left(x\right)\) is the amino acid encoded by codon \(x\), \(\beta\) is the stringency parameter, and \(\omega\) is a relative rate of nonsynonymous to synonymous mutations after accounting for the selection encapsulated by the preferences.

We define a variable transformation of the four nucleotide frequency parameters \(\phi_w\) (three of which are unique).

This transformation aids in numerical optimization.

Specifically, we number the four nucleotides in alphabetical order so that \(w = 0\) denotes A, \(w = 1\) denotes C, \(w = 2\) denotes G, and \(w = 3\) denotes T.

We then define the three free variables \(\eta_0\), \(\eta_1\), and \(\eta_2\), all of which are constrained to fall between zero and one.

For notational convenience in the formulas below, we also define \(\eta_3 = 0\); note however that \(\eta_3\) is not a free parameter, as it is always zero.

We define \(\phi_w\) in terms of these \(\eta_i\) variables by

(4)\[\phi_w = \left(\prod_{i = 0}^{w - 1} \eta_i\right) \left(1 - \eta_w\right)\]

or conversely

(5)\[\eta_w = 1 - \phi_w / \left(\prod_{i = 0}^{w - 1} \eta_i\right).\]

Note that setting \(\eta_w = \frac{3 - w}{4 - w}\) makes all of the \(\phi_w\) values equal to \(\frac{1}{4}\).

The derivatives are:

(6)\[\begin{split}\frac{\partial \phi_w}{\partial \eta_i}

& = &

\begin{cases}

\left(\prod_{j = 0}^{i - 1} \eta_j\right)\left(\prod_{j = i + 1}^{w - 1}\eta_j\right) \left(1 - \eta_w\right) = \frac{\phi_w}{\eta_i} & \mbox{if $i < w$} \\

-\prod_{j = 0}^{w - 1} \eta_j = \frac{\phi_w}{\eta_i - 1}& \mbox{if $i = w$} \\

0 & \mbox{if $i > w$} \\

\end{cases} \\

& = &

\begin{cases}

\frac{\phi_{w}}{\eta_i - \delta_{iw}} & \mbox{if $i \le w$,} \\

0 & \mbox{otherwise,}

\end{cases}\end{split}\]

where \(\delta_{ij}\) is the Kronecker-delta, equal to 1 if \(i = j\) and 0 otherwise.

Given these definitions, the free parameters in and ExpCM model are \(\kappa\), \(\eta_0\), \(\eta_1\), \(\eta_2\), \(\beta\), and \(\omega\).

Here are the derivatives of \(P_{r,xy}\) with respect to each of these parameters:

(7)\[\begin{split}\frac{\partial P_{r,xy}}{\partial \kappa}

&=&

\begin{cases}

\frac{P_{r,xy}}{\kappa} & \mbox{if $x$ is converted to $y$ by a transition of a nucleotide to $w$,} \\

0 & \mbox{if $x$ and $y$ differ by something other than a single transition,} \\

-\sum\limits_{z \ne x} \frac{\partial P_{r,xz}}{\partial \kappa} & \mbox{if $x = y$.}

\end{cases}\end{split}\]

(8)\[\begin{split}\frac{\partial P_{r,xy}}{\partial \eta_i}

=

\begin{cases}

\frac{P_{r,xy}}{\phi_w} \frac{\partial \phi_w}{\partial \eta_i} = \frac{P_{r,xy}}{\eta_i - \delta_{iw}} & \mbox{if $x$ is converted to $y$ by a single-nucleotide mutation to $w \ge i$,} \\

0 & \mbox{if $i > w$ or $x$ and $y$ differ by more than one nucleotide,} \\

-\sum\limits_{z \ne x} \frac{\partial P_{r,xz}}{\partial \eta_i} & \mbox{if $x = y$.}

\end{cases}\end{split}\]

(9)\[\begin{split}\frac{\partial P_{r,xy}}{\partial \omega} =

\begin{cases}

0 & \mbox{if $\operatorname{A}\left(x\right) = \operatorname{A}\left(y\right)$ and $x \ne y$} \\

\frac{P_{r,xy}}{\omega} & \mbox{if $\operatorname{A}\left(x\right) \ne \operatorname{A}\left(y\right)$,} \\

-\sum\limits_{z \ne x} \frac{\partial P_{r,xz}}{\partial \omega} & \mbox{if $x = y$.}

\end{cases}\end{split}\]

(10)\[\begin{split}\frac{\partial P_{r,xy}}{\partial \beta} =

\begin{cases}

0 & \mbox{if $\operatorname{A}\left(x\right) = \operatorname{A}\left(y\right)$ and $x \ne y$}, \\

0 & \mbox{if $\pi_{r,\operatorname{A}\left(x\right)} = \pi_{r,\operatorname{A}\left(y\right)}$ and $x \ne y$}, \\

\frac{P_{r,xy}}{\beta} + P_{r,xy}

\frac{\left(\pi_{r,\operatorname{A}\left(x\right)} / \pi_{r,\operatorname{A}\left(y\right)}\right)^{\beta} \times \ln\left(\pi_{r,\operatorname{A}\left(x\right)} / \pi_{r,\operatorname{A}\left(y\right)}\right)}{1 - \left(\pi_{r,\operatorname{A}\left(x\right)} / \pi_{r,\operatorname{A}\left(y\right)}\right)^{\beta}}

& \mbox{if $\operatorname{A}\left(x\right) \ne \operatorname{A}\left(y\right)$,} \\

-\sum\limits_{z \ne x} \frac{\partial P_{r,xz}}{\partial \beta} & \mbox{if $x = y$.}

\end{cases}\end{split}\]

The stationary state of the substitution model defined by \(P_{r,xy}\) is

(11)\[p_{r,x} = \frac{q_x f_{r,x}}{\sum_z q_z f_{r,z}}\]

where

(12)\[f_{r,x} = \left(\pi_{r,\operatorname{A}\left(x\right)}\right)^{\beta}\]

and

(13)\[q_x = \phi_{x_0}\phi_{x_1}\phi_{x_2}\]

where \(x_0\), \(x_1\), and \(x_2\) are the nucleotides at the first, second, and third positions of codon \(x\).

The derivatives of the stationary state with respect to \(\kappa\) and \(\omega\) are zero as these do not affect that state, so:

(14)\[\frac{\partial p_{r,x}}{\partial \kappa} = \frac{\partial p_{r,x}}{\partial \omega} = 0\]

The stationary state is sensitive to the value of \(\beta\), with derivative:

(15)\[\begin{split}\frac{\partial p_{r,x}}{\partial \beta} &=&

\frac{p_{r,x}\left[\ln\left(\pi_{r,\operatorname{A}\left(x\right)}\right)\left(\sum_z f_{r,z} q_z\right) - \sum_z \ln\left(\pi_{r,\operatorname{A}\left(z\right)}\right) f_{r,z} q_z\right]}

{\sum_z q_z f_{r,z}} \\

&=& p_{r,x} \left(\ln\left(\pi_{r,\operatorname{A}\left(x\right)}\right) - \frac{\sum_z \ln\left(\pi_{r,\operatorname{A}\left(z\right)}\right) f_{r,z} q_z}{\sum_z q_z f_{r,z}}\right) \\

&=& p_{r,x} \left(\ln\left(\pi_{r,\operatorname{A}\left(x\right)}\right) - \sum_z \ln\left(\pi_{r,\operatorname{A}\left(z\right)}\right) p_{r,z}\right)\end{split}\]

The stationary state is also sensitive to the values of \(\eta_0\), \(\eta_1\), and \(\eta_2\):

(16)\[\begin{split}\frac{\partial p_{r,x}}{\partial \eta_i} &=&

\frac{f_{r,x} \frac{\partial q_x}{\partial \eta_i}\left(\sum_z q_z f_{r,z}\right) - f_{r,x} q_x \left(\sum_z f_{r,z} \frac{\partial q_z}{\partial \eta_i} \right)}

{\left(\sum_z q_z f_{r,z}\right)^2} \\

&=& \frac{\partial q_x}{\partial \eta_i}\frac{p_{r,x}}{q_x} - p_{r,x} \frac{\sum_z f_{r,z} \frac{\partial q_z}{\partial \eta_i}}{\sum_z q_z f_{r,z}}\end{split}\]

where the \(\frac{\partial q_x}{\partial \eta_i}\) terms are:

(17)\[\begin{split}\frac{\partial q_x}{\partial \eta_i} & = &

\frac{\partial \phi_{x_0}}{\partial \eta_i} \phi_{x_1} \phi_{x_2} +

\frac{\partial \phi_{x_1}}{\partial \eta_i} \phi_{x_0} \phi_{x_2} +

\frac{\partial \phi_{x_2}}{\partial \eta_i} \phi_{x_0} \phi_{x_1} \\

& = &

\sum_{j=0}^{2} \frac{\partial \phi_{x_j}}{\partial \eta_i} \prod_{k \ne j} \phi_{x_k} \\

& = &

q_x \sum_{j=0}^{2} \frac{1}{\phi_{x_j}} \frac{\partial \phi_{x_j}}{\partial \eta_i} \\

& = &

q_x \sum_{j=0}^{2} \frac{\operatorname{bool}\left(i \le x_j \right)}{\eta_i - \delta_{ix_j}}\end{split}\]

where \(\operatorname{bool}\left(i \le j\right)\) is 1 if \(i \le j\) and 0 otherwise, and so

(18)\[\begin{split}\frac{\partial p_{r,x}}{\partial \eta_i} & = &

p_{r,x}

\left[\sum_{j=0}^{2} \frac{\operatorname{bool}\left(i \le x_j \right)}{\eta_i - \delta_{ix_j}} - \frac{\sum_z f_{r,z} q_z \sum_{j=0}^{2} \frac{\operatorname{bool}\left(i \le z_j \right)}{\eta_i - \delta_{iz_j}}}{\sum_z q_z f_{r,z}} \right] \\

& = &

p_{r,x}

\left[\sum_{j=0}^{2} \frac{\operatorname{bool}\left(i \le x_j \right)}{\eta_i - \delta_{ix_j}} - \frac{\sum_z p_{r,z} \sum_{j=0}^{2} \frac{\operatorname{bool}\left(i \le z_j \right)}{\eta_i - \delta_{iz_j}}}{\sum_z p_{r,z}} \right]

\\\end{split}\]

In the description above, the nucleotide frequencies \(\phi_w\) are fit as three free parameters.

Now let’s consider the case where we instead calculate them empirically to give a stationary state that implies nucleotide frequencies that match those empirically observed in the alignment.

This should be beneficial in terms of optimization because it reduces the number of model parameters that need to be optimized.

Let \(g_w\) be the empirical frequency of nucleotide \(w\) taken over all sites and sequences in the alignment.

Obviously, \(1 = \sum_w g_w\).

We want to empirically set \(\phi_w\) to some value \(\hat{\phi}_w\) such that when \(q_x = \hat{\phi}_{x_0} \hat{\phi}_{x_1} \hat{\phi}_{x_2}\) then

(19)\[\begin{split}g_w & = &

\frac{1}{L} \sum_r \sum_x \frac{1}{3} N_w\left(x\right) p_{r,x} \\

& = &

\frac{1}{3L} \sum_r \frac{\sum_x N_w\left(x\right) f_{r,x} \prod_{k=0}^2 \hat{\phi}_{x_k}}{\sum_y f_{r,y} \prod_{k=0}^2 \hat{\phi}_{y_k}} \\\end{split}\]

where \(N_w\left(x\right) = \sum_{k=0}^2 \delta_{x_k, w}\) is the number of occurrence of nucleotide \(w\) in codon \(x\), \(r\) ranges over all codon sites in the gene, \(x\) ranges over all codons, and \(k\) ranges over the first three nucleotides.

There are three independent \(g_w\) values and three independent \(\hat{\phi}_w\) values (since \(1 = \sum_w g_w = \sum_w \hat{\phi}_w\)), so we have three equations and three unknowns.

We could not solve the set of three equations analytically for the \(\hat{\phi}_w\) values, so instead we use a non-linear equation solver to determine their values.

When using empirical nucleotide frequencies, we no longer need to calculate any derivatives with respect to \(\eta_i\) as we no longer have the \(\eta_i\) free parameters.

However, now the value of \(\phi_w = \hat{\phi}_w\) depends on \(\beta\) via the \(f_{r,x}\) parameters in Equation (19).

So we need new formulas for \(\frac{\partial p_{r,x}}{\partial \beta}\) and \(\frac{\partial P_{r,xy}}{\partial \beta}\) that accounts for this dependency.

Since we do not have an analytic expression for \(\hat{\phi}_w\), we cannot compute \(\frac{\partial \phi_w}{\partial \beta}\) analytically.

But we can compute these derivatives numerically.

This is done using a finite-difference method.

We now update the formula in Equation (10) for the case when \(\phi_w\) depends on \(\beta\). In that case, we have:

(20)\[\begin{split}\frac{\partial Q_{xy}}{\partial \beta} =

\begin{cases}

\frac{\partial \phi_w}{\partial \beta} & \mbox{if $x$ is converted to $y$ by a single-nucleotide transversion to $w$,} \\

\kappa \frac{\partial \phi_w}{\partial \beta} & \mbox{if $x$ is converted to $y$ by a single-nucleotide transition to $w$,} \\

0 & \mbox{if $x$ and $y$ differ by more than one nucleotide,} \\

\end{cases}\end{split}\]

and

(21)\[\begin{split}\frac{\partial F_{r,xy}}{\partial \beta}

&=&

\begin{cases}

0 & \mbox{if $\mathcal{A}\left(x\right) = \mathcal{A}\left(y\right)$} \\

0 & \mbox{if $\mathcal{A}\left(x\right) \ne \mathcal{A}\left(y\right)$ and $\pi_{r,\mathcal{A}\left(x\right)} = \pi_{r,\mathcal{A}\left(y\right)}$} \\

\frac{F_{r,xy} \left(1 - \frac{F_{r,xy}}{\omega} \left(\pi_{r,\operatorname{A}\left(x\right)} / \pi_{r,\operatorname{A}\left(y\right)}\right)^{\beta}\right)}{\beta}

& \mbox{otherwise,}

\end{cases}\end{split}\]

so for all \(x \ne y\), we have

(22)\[\begin{split}\frac{\partial P_{r,xy}}{\partial \beta}

& = &

\frac{\partial \left(Q_{xy} \times F_{r,xy}\right)}{\partial \beta} \\

& = &

Q_{xy} \frac{\partial F_{r,xy}}{\partial \beta} + F_{r,xy} \frac{\partial Q_{xy}}{\partial \beta} \\

& = &

\left[\frac{\partial P_{r,xy}}{\partial \beta}\right]_{\mbox{free } \phi_w} + F_{r,xy} \frac{\partial Q_{xy}}{\partial \beta}.\end{split}\]

where \(\left[\frac{\partial P_{r,xy}}{\partial \beta}\right]_{\mbox{free } \phi_w}\) is the expression given by Equation (10).

When \(x = y\), we have \(\frac{\partial P_{r,xx}}{\partial \beta} = \sum\limits_{z \ne x} -\frac{\partial P_{r,xz}}{\partial \beta}\).

We also must update the formula in Equation (15) for the case where \(\phi_w\) depends on \(\beta\).

We have:

(23)\[\begin{split}\frac{\partial q_x}{\partial \beta}

& = &

\frac{\partial \left(\phi_{x_0} \phi_{x_1} \phi_{x_2}\right)}{\partial \beta} \\

& = &

\sum\limits_{j=0}^2 \frac{\partial \phi_{x_j}}{\partial \beta} \prod_{k \ne j} \phi_{x_k} \\

& = &

q_x \sum\limits_{j=0}^2 \frac{1}{\phi_{x_j}} \frac{\partial \phi_{x_j}}{\partial \beta}\end{split}\]

and

(24)\[\frac{\partial f_{r,x}}{\partial \beta}

=

f_{r,x} \left[\ln\left(\pi_{r,\operatorname{A}\left(x\right)}\right)\right].\]

So:

(25)\[\begin{split}\frac{\partial p_{r,x}}{\partial \beta}

& = &

\frac{\partial}{\partial \beta} \left(\frac{q_x f_{r,x}}{\sum_z q_z f_{r,z}}\right) \\

& = &

\frac{\left(q_x \frac{\partial f_{r,x}}{\partial \beta} + f_{r,x} \frac{\partial q_x}{\partial \beta}\right) \sum_z q_z f_{r,z} - q_x f_{r,x} \sum_z \left(q_z \frac{\partial f_{r,z}}{\partial \beta} + f_{r,z} \frac{\partial q_z}{\partial \beta}\right)}{\left(\sum_z q_z f_{r,z}\right)^2} \\

& = &

\frac{\left(q_x f_{r,x} \left[\ln\left(\pi_{r,\operatorname{A}\left(x\right)}\right)\right] + f_{r,x} q_x \sum\limits_{j=0}^2 \frac{1}{\phi_{x_j}} \frac{\partial \phi_{x_j}}{\partial \beta}\right) \sum_z q_z f_{r,z} - q_x f_{r,x} \sum_z \left(q_z f_{r,z} \left[\ln\left(\pi_{r,\operatorname{A}\left(z\right)}\right)\right] + f_{r,z} q_z \sum\limits_{j=0}^2 \frac{1}{\phi_{z_j}} \frac{\partial \phi_{z_j}}{\partial \beta}\right)}{\left(\sum_z q_z f_{r,z}\right)^2} \\

& = &

p_{r,x} \frac{\left(\left[\ln\left(\pi_{r,\operatorname{A}\left(x\right)}\right)\right] + \sum\limits_{j=0}^2 \frac{1}{\phi_{x_j}} \frac{\partial \phi_{x_j}}{\partial \beta}\right) \sum_z q_z f_{r,z} - \sum_z \left(q_z f_{r,z} \left[\ln\left(\pi_{r,\operatorname{A}\left(z\right)}\right)\right] + f_{r,z} q_z \sum\limits_{j=0}^2 \frac{1}{\phi_{z_j}} \frac{\partial \phi_{z_j}}{\partial \beta}\right)}{\sum_z q_z f_{r,z}} \\

& = &

p_{r,x} \left[\left[\ln\left(\pi_{r,\operatorname{A}\left(x\right)}\right)\right] + \sum\limits_{j=0}^2 \frac{1}{\phi_{x_j}} \frac{\partial \phi_{x_j}}{\partial \beta} - \sum_z p_{r,z}\left(\left[\ln\left(\pi_{r,\operatorname{A}\left(z\right)}\right)\right] + \sum\limits_{j=0}^2 \frac{1}{\phi_{z_j}} \frac{\partial \phi_{z_j}}{\partial \beta}\right)\right] \\

& = &

\left[\frac{\partial p_{r,x}}{\partial \beta}\right]_{\mbox{free } \phi_w} +

p_{r,x} \left[\sum\limits_{j=0}^2 \frac{1}{\phi_{x_j}} \frac{\partial \phi_{x_j}}{\partial \beta} - \sum_z p_{r,z}\sum\limits_{j=0}^2 \frac{1}{\phi_{z_j}} \frac{\partial \phi_{z_j}}{\partial \beta}\right]\end{split}\]

where \(\left[\frac{\partial p_{r,x}}{\partial \beta}\right]_{\mbox{free } \phi_w}\) is the expresssion given by Equation (15).

The \(\omega\) value in the previous models is the gene-wide relative rate of nonsynonymous to synonymous mutations after accounting for the differing preferences among sites.

In some cases, it might be possible to specify a priori expections for the diversifying pressure at each site.

For instance, viruses benefit from amino-acid change in sites targeted by the immune system and, consequently, these sites have a higher rate of amino-acid substitution than expected given their level of inherent functional constraint.

We can incorporate our expectations for diversifying pressure at specific sites into the selection terms \(F_{r,xy}\).

Let \(\delta_{r}\) be the pre-determined diversifying pressure for amino-acid change at site \(r\) in the protein. A large positive value of \(\delta_r\) corresponds to high pressure for amino-acid diversification, and negative value corresponds to expected pressure against amino-acid diversification beyond that captured in the amino-acid preferences. We then replace \(\omega\) in Equation (3) with the expression \(\omega\times\left(1+\omega_{2}\times\delta_{r}\right)\), resulting in selection terms:

(26)\[\begin{split}F_{r,xy} =

\begin{cases}

1 & \mbox{if $\mathcal{A}\left(x\right) = \mathcal{A}\left(y\right)$} \\

\omega\times\left(1+\omega_{2}\times\delta_{r}\right) & \mbox{if $\mathcal{A}\left(x\right) \ne \mathcal{A}\left(y\right)$ and $\pi_{r,\mathcal{A}\left(x\right)} = \pi_{r,\mathcal{A}\left(y\right)}$} \\

\omega\times\left(1+\omega_{2}\times\delta_{r}\right) \times \frac{\ln\left(\left(\pi_{r,\mathcal{A}\left(y\right)}\right)^{\beta} / \left(\pi_{r,\mathcal{A}\left(x\right)}\right)^{\beta}\right)}{1 - \left(\left(\pi_{r,\mathcal{A}\left(x\right)}\right)^{\beta} / \left(\pi_{r,\mathcal{A}\left(y\right)}\right)^{\beta}\right)} & \mbox{otherwise.}

\end{cases}\end{split}\]

Whereas before \(\omega\) reflected the elevation of non-synonymous substitution rate (averaged across the entire gene) beyond that expected given the amino-acid preferences, now \(\omega\) reflects a gene-wide rate of elevated non-synonymous substitution after taking into account the expected sites of diversifying pressure (as represented by \(\delta_r\)) weighted by \(\omega_{2}\times\delta_{r}\). These new selection terms in equation Equation (26) are identical the selection terms in Equation (3) when \(\omega_{2} = 0\).

To ensure a positive value of \(\omega\times\left(1+\omega_{2}\times\delta_{r}\right)\), we constrain \(\omega>0\), \(-1<\omega_2<\infty\), and \(\lvert\max_r\delta_r\rvert\le1\).

We have added one more parameter to Equation (1), \(\omega_2\), so we need to add a new derivative, \(\frac{\partial P_{r,xy}}{\partial \omega_2}\) (27).

(27)\[\begin{split}\frac{\partial P_{r,xy}}{\partial \omega_2} =

\begin{cases}

0 & \mbox{if $\operatorname{A}\left(x\right) = \operatorname{A}\left(y\right)$ and $x \ne y$} \\

\omega \times \delta_r \times \frac{\ln\left(\left(\pi_{r,\mathcal{A}\left(y\right)}\right)^{\beta} / \left(\pi_{r,\mathcal{A}\left(x\right)}\right)^{\beta}\right)}{1 - \left(\left(\pi_{r,\mathcal{A}\left(x\right)}\right)^{\beta} / \left(\pi_{r,\mathcal{A}\left(y\right)}\right)^{\beta}\right)} \times Q_{xy} & \mbox{if $\operatorname{A}\left(x\right) \ne \operatorname{A}\left(y\right)$,} \\

-\sum\limits_{z \ne x} \frac{\partial P_{r,xy}}{\partial \omega_2} & \mbox{if $x = y$.}

\end{cases}.\end{split}\]

In most situations, the amino-acid preferences \(\pi_{r,a}\) are experimentally measured.

But in certain situations, we wish to treat these as free parameters that we optimize by maximum likelihood.

There are two different implementations of how this is done, instantiated in the ExpCM_fitprefs and ExpCM_fitprefs2 classes.

These classes differ in how the preferences are represented as parameters, and so may have different optimization efficiencies.

First, we describe aspects general to both implementations, then we describe the details specific to each.

The \(F_{r,xy}\) terms defined by Equation (3) depend on \(\pi_{r,a}\).

The derivative is

(28)\[\begin{split}\frac{\partial F_{r,xy}}{\partial \pi_{r,a}} &=&

\begin{cases}

\left(\delta_{a\mathcal{A}\left(y\right)} - \delta_{a\mathcal{A}\left(x\right)} \right) \frac{\omega \beta}{2 \pi_{r,a}} & \mbox{if $\pi_{r, \mathcal{A}\left(x\right)} = \pi_{r,\mathcal{A}\left(y\right)}$}, \\

\left(\delta_{a\mathcal{A}\left(y\right)} - \delta_{a\mathcal{A}\left(x\right)} \right) \frac{\omega \beta}{\pi_{r,a}} \frac{\left(\pi_{r,\mathcal{A}\left(x\right)} / \pi_{r,\mathcal{A}\left(y\right)}\right)^{\beta}\left[\ln\left(\left(\frac{\pi_{r,\mathcal{A}\left(x\right)}}{\pi_{r,\mathcal{A}\left(y\right)}}\right)^{\beta}\right) - 1\right] + 1}{\left(1 - \left(\frac{\pi_{r,\mathcal{A}\left(x\right)}}{\pi_{r,\mathcal{A}\left(y\right)}}\right)^{\beta}\right)^2} & \mbox{if $\pi_{r, \mathcal{A}\left(x\right)} \ne \pi_{r,\mathcal{A}\left(y\right)}$}, \\

\end{cases}\end{split}\]

where the expressions when \(\pi_{r,\mathcal{A}\left(x\right)} = \pi_{r,\mathcal{A}\left(y\right)}\) are derived from application of L’Hopital’s rule, and \(\delta_{ij}\) is the Kronecker delta.

Define

\[\begin{split}\tilde{F}_{r,xy} =

\begin{cases}

0 & \mbox{if $\mathcal{A}\left(x\right) = \mathcal{A}\left(y\right)$,} \\

\frac{\omega \beta}{2} & \mbox{if $\mathcal{A}\left(x\right) \ne \mathcal{A}\left(y\right)$ and $\pi_{r, \mathcal{A}\left(x\right)} = \pi_{r,\mathcal{A}\left(y\right)}$,} \\

\left(\omega \beta\right) \frac{\left(\pi_{r,\mathcal{A}\left(x\right)} / \pi_{r,\mathcal{A}\left(y\right)}\right)^{\beta}\left[\ln\left(\left(\frac{\pi_{r,\mathcal{A}\left(x\right)}}{\pi_{r,\mathcal{A}\left(y\right)}}\right)^{\beta}\right) - 1\right] + 1}{\left(1 - \left(\frac{\pi_{r,\mathcal{A}\left(x\right)}}{\pi_{r,\mathcal{A}\left(y\right)}}\right)^{\beta}\right)^2} & \mbox{if $\mathcal{A}\left(x\right) \ne \mathcal{A}\left(y\right)$ and $\pi_{r, \mathcal{A}\left(x\right)} \ne \pi_{r,\mathcal{A}\left(y\right)}$,}

\end{cases}\end{split}\]

so that

\[\frac{\partial F_{r,xy}}{\partial \pi_{r,a}} = \left(\delta_{a\mathcal{A}\left(y\right)} - \delta_{a\mathcal{A}\left(x\right)} \right) \frac{\tilde{F}_{r,xy}}{\pi_{r,a}}.\]

We also need to calculate the derivative of the stationary state \(p_{r,x}\) given by Equation (11) with respect to the preference. In this calculation, we simplify the algebra by taking advantage of the fact that for our fit preferences models, we always have \(\beta = 1\) to simplify from the first to the second line below:

(29)\[\begin{split}\frac{\partial p_{r,x}}{\partial \pi_{r,a}} &=&

\frac{\partial}{\partial \pi_{r,x}}\left(\frac{q_x \left(\pi_{r,\mathcal{A}\left(x\right)}\right)^{\beta}}{\sum_z q_z \left(\pi_{r,\mathcal{A}\left(z\right)}\right)^{\beta}}\right) \\

&=&

\frac{\partial}{\partial \pi_{r,x}}\left(\frac{q_x \pi_{r,\mathcal{A}\left(x\right)}}{\sum_z q_z \pi_{r,\mathcal{A}\left(z\right)}}\right) \\

&=&

\frac{q_x \delta_{a\mathcal{A}\left(x\right)}\left( \sum_z q_z \pi_{r,\mathcal{A}\left(z\right)}\right) - q_x \pi_{r,\mathcal{A}\left(x\right)} \times \sum_z q_z \delta_{a\mathcal{A}\left(z\right)} }{\left( \sum_z q_z \pi_{r,\mathcal{A}\left(z\right)} \right)^2} \\

&=&

\delta_{a\mathcal{A}\left(x\right)} \frac{p_{r,x}}{\pi_{r,a}} - p_{r,x} \sum_z\delta_{a\mathcal{A}\left(z\right)} \frac{p_{r,z}}{\pi_{r,a}}.\end{split}\]

ExpCM_fitprefs implementation

We define a variable transformation from the 20 \(\pi_{r,a}\) values at each site \(r\) (19 of these 20 values are unique since they sum to one).

This transformation is analogous to that in Equations (4) and (5).

Specifically, we number the 20 amino acids such that \(a = 0\) means alanine, \(a = 1\) means cysteine, and so on up to \(a = 19\) meaning tyrosine..

We then define 19 free variables for each site \(r\): \(\zeta_{r,0}, \zeta_{r,1}, \ldots, \zeta_{r,18}\), all of which are constrained to value between zero and one.

For notational convenience, we also define \(\zeta_{r,19} = 0\), but not that \(\zeta_{r,19}\) is not a free parameter as it is always zero.

We the define

(30)\[\pi_{r,a} = \left(\prod\limits_{i=0}^{a - 1} \zeta_{r,i}\right) \left(1 - \zeta_{r,a}\right)\]

and conversely

(31)\[\zeta_{r,a} = 1 - \pi_{r,a} / \left(\prod\limits_{i=0}^{a - 1} \zeta_{r,i} \right).\]

Note that setting \(\zeta_{r,a} = \frac{19 - a}{20 - a}\) makes all the \(\pi_{r,a}\) values equal to \(\frac{1}{20}\).

In analogy with Equation (6), we have

(32)\[\begin{split}\frac{\partial \pi_{r,a}}{\partial \zeta_{r,i}} =

\begin{cases}

\frac{\pi_{r,a}}{\zeta_{r,i} - \delta_{ia}} & \mbox{if $i \le a$,} \\

0 & \mbox{otherwise,}

\end{cases}\end{split}\]

where \(\delta_{ij}\) is the Kronecker-delta.

We then have

(33)\[\begin{split}\frac{\partial P_{r,xy}}{\partial \zeta_{r,i}} =

Q_{xy} \sum\limits_a \frac{\partial F_{r,xy}}{\partial \pi_{r,a}} \frac{\partial \pi_{r,a}}{\partial \zeta_{r,i}} =

\begin{cases}

0 & \mbox{if $i > \mathcal{A}\left(x\right)$ and $i > \mathcal{A}\left(y\right)$ and $x \ne y$,} \\

\frac{Q_{xy} \tilde{F}_{r,xy}}{\zeta_{r,i} - \delta_{i\mathcal{A}\left(y\right)}} & \mbox{if $i > \mathcal{A}\left(x\right)$ and $i \le \mathcal{A}\left(y\right)$ and $x \ne y$,} \\

-\frac{Q_{xy} \tilde{F}_{r,xy}}{\zeta_{r,i} - \delta_{i\mathcal{A}\left(x\right)}} & \mbox{if $i \le \mathcal{A}\left(x\right)$ and $i > \mathcal{A}\left(y\right)$ and $x \ne y$,} \\

\frac{Q_{xy} \tilde{F}_{r,xy}}{\zeta_{r,i} - \delta_{i\mathcal{A}\left(y\right)}} - \frac{Q_{xy} \tilde{F}_{r,xy}}{\zeta_{r,i} - \delta_{i\mathcal{A}\left(x\right)}} & \mbox{if $i \le \mathcal{A}\left(x\right)$ and $i \le \mathcal{A}\left(y\right)$ and $x \ne y$} \\

-\sum\limits_{z \ne x} \frac{\partial P_{r,xy}}{\partial \zeta_{r,i}} & \mbox{if $x = y$}.

\end{cases}\end{split}\]

We also have:

(34)\[\begin{split}\frac{\partial p_{r,x}}{\partial \zeta_{r,i}} &=&

\sum\limits_a \frac{\partial p_{r,x}}{\partial \pi_{r,a}} \frac{\partial \pi_{r,a}}{\partial \zeta_{r,i}} \\

&=& p_{r,x} \sum\limits_{a \ge i} \frac{1}{\zeta_{r,i} - \delta_{ia}} \left(\delta_{a\mathcal{A}\left(x\right)} - \sum_z\delta_{a\mathcal{A}\left(z\right)} p_{r,z} \right).\end{split}\]

ExpCM_prefs2 implementation

For this implementation, we define a different variable transformation from the 20 \(\pi_{r,a}\) values at each site \(r\) (19 of these 20 values are unique since they sum to one).

We define 19 free variables for each site \(r\): \(\zeta_{r,0}, \zeta_{r,1}, \ldots, \zeta_{r,18}\), all of which are constrained to be greater than zero.

For notational convenience, we also define \(\zeta_{r,19} = 1\), but not that \(\zeta_{r,19}\) is not a free parameter as it is always one.

We then define

(35)\[\pi_{r,a} = \frac{\zeta_{r,a}}{\sum_{j} \zeta_{r,j}}\]

and conversely

(36)\[\zeta_{r,a} = \frac{\pi_{r,a}}{\pi_{r,19}}.\]

We therefore have

(37)\[\begin{split}\frac{\partial \pi_{r,a}}{\partial \zeta_{r,i}} &=&

\frac{1}{\sum_{j} \zeta_{r,j}} \left(\delta_{ia} - \frac{\zeta_{r,a}}{\sum_{j} \zeta_{r,j}}\right) \\

&=& \frac{\pi_{r,a}}{\zeta_{r,a}} \left(\delta_{ia} - \pi_{r,a}\right)\end{split}\]

where \(\delta_{ij}\) is the Kronecker-delta.

We then have

(38)\[\begin{split}\frac{\partial P_{r,xy}}{\partial \zeta_{r,i}} &=&

Q_{xy} \sum\limits_a \frac{\partial F_{r,xy}}{\partial \pi_{r,a}} \frac{\partial \pi_{r,a}}{\partial \zeta_{r,i}} \\

&=& Q_{xy} \tilde{F}_{r,xy} \sum\limits_a \left(\delta_{a\mathcal{A}\left(y\right)} - \delta_{a\mathcal{A}\left(x\right)} \right) \frac{1}{\zeta_{r,a}} \left(\delta_{ia} - \pi_{r,a}\right) \\

&=& Q_{xy} \tilde{F}_{r,xy} \left[\left(\sum\limits_a \left(\delta_{a\mathcal{A}\left(y\right)} - \delta_{a\mathcal{A}\left(x\right)} \right) \frac{\delta_{ia}}{\zeta_{r,a}}\right) - \left(\sum\limits_a \left(\delta_{a\mathcal{A}\left(y\right)} - \delta_{a\mathcal{A}\left(x\right)} \right) \frac{\pi_{r,a}}{\zeta_{r,a}}\right)\right] \\

&=& Q_{xy} \tilde{F}_{r,xy} \left[\frac{\delta_{i\mathcal{A}\left(y\right)} - \delta_{i\mathcal{A}\left(x\right)}}{\zeta_{r,i}} - \left(\sum\limits_a \left(\delta_{a\mathcal{A}\left(y\right)} - \delta_{a\mathcal{A}\left(x\right)} \right) \frac{\pi_{r,a}}{\zeta_{r,a}}\right)\right] \\

&=& Q_{xy} \tilde{F}_{r,xy} \left[\frac{\delta_{i\mathcal{A}\left(y\right)} - \delta_{i\mathcal{A}\left(x\right)}}{\zeta_{r,i}} - \left(\frac{1}{\sum_j \zeta_{r,j}}\sum\limits_a \left(\delta_{a\mathcal{A}\left(y\right)} - \delta_{a\mathcal{A}\left(x\right)} \right) \right)\right] \\

&=& Q_{xy} \tilde{F}_{r,xy} \left[\frac{\delta_{i\mathcal{A}\left(y\right)} - \delta_{i\mathcal{A}\left(x\right)}}{\zeta_{r,i}}\right].\end{split}\]

We also have:

(39)\[\begin{split}\frac{\partial p_{r,x}}{\partial \zeta_{r,i}} &=&

\sum\limits_a \frac{\partial p_{r,x}}{\partial \pi_{r,a}} \frac{\partial \pi_{r,a}}{\partial \zeta_{r,i}} \\

&=& p_{r,x} \sum\limits_{a} \frac{\delta_{ia} - \pi_{r,a}}{\zeta_{r,a}} \left(\delta_{a\mathcal{A}\left(x\right)} - \sum_z\delta_{a\mathcal{A}\left(z\right)} p_{r,z} \right) \\

&=& p_{r,x} \left[\frac{1}{\zeta_{r,i}} \left(\delta_{i\mathcal{A}\left(x\right)} - \sum_z\delta_{i\mathcal{A}\left(z\right)} p_{r,z} \right) - \sum\limits_{a} \frac{\pi_{r,a}}{\zeta_{r,a}} \left(\delta_{a\mathcal{A}\left(x\right)} - \sum_z\delta_{a\mathcal{A}\left(z\right)} p_{r,z} \right)\right] \\

&=& p_{r,x} \left[\frac{1}{\zeta_{r,i}} \left(\delta_{i\mathcal{A}\left(x\right)} - \sum_z\delta_{i\mathcal{A}\left(z\right)} p_{r,z} \right) - \frac{\pi_{r,\mathcal{A}\left(x\right)}}{\zeta_{r,\mathcal{A}\left(x\right)}} + \sum\limits_{a} \frac{\pi_{r,a}}{\zeta_{r,a}} \sum_z\delta_{a\mathcal{A}\left(z\right)} p_{r,z}\right] \\

&=& p_{r,x} \left[\frac{1}{\zeta_{r,i}} \left(\delta_{i\mathcal{A}\left(x\right)} - \sum_z\delta_{i\mathcal{A}\left(z\right)} p_{r,z} \right) - \frac{1}{\sum_j \zeta_{r,j}} + \frac{1}{\sum_j \zeta_{r,j}} \sum\limits_{a} \sum_z\delta_{a\mathcal{A}\left(z\right)} p_{r,z}\right] \\

&=& \frac{p_{r,x}}{\zeta_{r,i}} \left(\delta_{i\mathcal{A}\left(x\right)} - \sum_z\delta_{i\mathcal{A}\left(z\right)} p_{r,z} \right).\end{split}\]

When the preferences are free parameters, we typically want to regularize them to avoid fitting lots of values of one or zero.

We do this by defining a regularizing prior over the preferences, and then maximizing the product of the likelihood and this regularizing prior (essentially, the maximum a posteriori estimate).

Inverse-quadratic prior

This is the prior used in Bloom, Biology Direct, 12:1 (note that the notation used here is slightly different than in that reference).

Let \(\pi_{r,a}\) be the preference that we are trying to optimize, and let \(\theta_{r,a}\) be our prior estimate of \(\pi_{r,a}\).

Typically, this estimate is the original experimentally measured preference \(\pi_{r,a}^{\rm{orig}}\) re-scaled by the optimized stringency parameter \(\beta\), namely \(\theta_{r,a} = \frac{\left(\pi_{r,a}^{\rm{orig}}\right)^{\beta}}{\sum_{a'} \left(\pi_{r,a'}^{\rm{orig}}\right)^{\beta}}\).

The prior is then

\[\Pr\left(\left\{\pi_{r,a}\right\} \mid \left\{\theta_{r,a}\right\}\right) =

\prod\limits_r \prod\limits_a \left(\frac{1}{1 + C_1 \times \left(\pi_{r,a} - \theta_{r,a}\right)^2}\right)^{C_2}.\]

or

\[\ln\left[ \Pr\left(\left\{\pi_{r,a}\right\} \mid \left\{\theta_{r,a}\right\}\right) \right] = -C_2 \sum\limits_r \sum\limits_a \ln\left(1 + C_1 \times \left(\pi_{r,a} - \theta_{r,a}\right)^2 \right)\]

where \(C_1\) and \(C_2\) are parameters that specify how concentrated the prior is (larger values make the prior more strongly peaked at \(\theta_{r,a}\)).

The derivative is

\[\frac{\partial \ln\left[ \Pr\left(\left\{\pi_{r,a}\right\} \mid \left\{\theta_{r,a}\right\}\right) \right]}{\partial \pi_{r,a}} =

\frac{-2 C_1 C_2 \left(\pi_{r,a} - \theta_{r,a}\right)}{1 + C_1 \times \left(\pi_{r,a} - \theta_{r,a}\right)^2},\]

This prior can then be defined in terms of the transformation variable for the ExpCM_fitprefs or ExpCM_fitprefs2 implementation:

ExpCM_fitprefs implementation

\[\begin{split}\frac{\partial \ln\left[ \Pr\left(\left\{\pi_{r,a}\right\} \mid \left\{\theta_{r,a}\right\}\right) \right]}{\partial \zeta_{r,i}}

&=& \sum\limits_a \frac{\partial \ln\left[ \Pr\left(\left\{\pi_{r,a}\right\} \mid \left\{\theta_{r,a}\right\}\right) \right]}{\partial \pi_{r,a}} \frac{\partial \pi_{r,a}}{\partial \zeta_{r,i}} \\

&=& -2 C_1 C_2 \sum\limits_{a \ge i} \frac{\left(\pi_{r,a} - \theta_{r,a}\right)}{1 + C_1 \times \left(\pi_{r,a} - \theta_{r,a}\right)^2 } \frac{\pi_{r,a}}{\zeta_{r,i} - \delta_ia}.\end{split}\]

ExpCM_fitprefs2 implementation

\[\begin{split}\frac{\partial \ln\left[ \Pr\left(\left\{\pi_{r,a}\right\} \mid \left\{\theta_{r,a}\right\}\right) \right]}{\partial \zeta_{r,i}}

&=& \sum\limits_a \frac{\partial \ln\left[ \Pr\left(\left\{\pi_{r,a}\right\} \mid \left\{\theta_{r,a}\right\}\right) \right]}{\partial \pi_{r,a}} \frac{\partial \pi_{r,a}}{\partial \zeta_{r,i}} \\

&=& -2 C_1 C_2 \sum\limits_{a} \frac{\left(\pi_{r,a} - \theta_{r,a}\right)}{1 + C_1 \times \left(\pi_{r,a} - \theta_{r,a}\right)^2 } \frac{\pi_{r,a}}{\zeta_{r,a}}\left(\delta_{ia} - \pi_{r,a}\right) \\

&=& \frac{-2 C_1 C_2}{\sum_j\zeta_{r,j}} \sum\limits_{a} \frac{\left(\pi_{r,a} - \theta_{r,a}\right)}{1 + C_1 \times \left(\pi_{r,a} - \theta_{r,a}\right)^2 } \left(\delta_{ia} - \pi_{r,a}\right).\end{split}\]

We consider the basic Goldman-Yang style YNGKP_M0 substitution model defined in Yang, Nielsen, Goldman, and Krabbe Pederson, Genetics, 155:431-449.

This model is not site-specific.

\(P_{xy}\) is the substitution rate from codon x to codon y and is defined by

(40)\[\begin{split}P_{xy} =

\begin{cases}

0 & \mbox{if $x$ and $y$ differ by more than one nucleotide,}\\

\mu \omega \Phi_{y} & \mbox{if $x$ is converted to $y$ by a single-nucleotide transversion,} \\

\kappa \mu \omega \Phi_{y} & \mbox{if $x$ is converted to $y$ by a single-nucleotide transition,} \\

-\sum\limits_{z \ne x} P_{xz} & \mbox{if $x = y$.}

\end{cases}\end{split}\]

where \(\kappa\) is the transition-transversion ratio, \(\Phi_y\) is the equilibrium frequency of

codon \(y\), \(\omega\) is the gene-wide rate of non-synonymous change, and \(\mu\) is the substitution rate.

Typically \(\Phi_y\) is determined empirically as described below,

and \(\kappa\) and \(\omega\) are optimized by maximum likelihood.

The derivatives are:

(41)\[\begin{split}\frac{\partial P_{xy}}{\partial \kappa}

&=&

\begin{cases}

\frac{P_{xy}}{\kappa} & \mbox{if $x$ is converted to $y$ by a transition of a nucleotide to $w$,} \\

0 & \mbox{if $x$ and $y$ differ by something other than a single transition,} \\

-\sum\limits_{z \ne x} \frac{\partial P_{xz}}{\partial \kappa} & \mbox{if $x = y$.}

\end{cases}\end{split}\]

(42)\[\begin{split}\frac{\partial P_{xy}}{\partial \omega} =

\begin{cases}

0 & \mbox{if $\operatorname{A}\left(x\right) = \operatorname{A}\left(y\right)$ and $x \ne y$} \\

\frac{P_{xy}}{\omega} & \mbox{if $\operatorname{A}\left(x\right) \ne \operatorname{A}\left(y\right)$,} \\

-\sum\limits_{z \ne x} \frac{\partial P_{xz}}{\partial \omega} & \mbox{if $x = y$.}

\end{cases}\end{split}\]

The stationary state of the substitution model defined by \(P_{xy}\) is

(43)\[p_{x} = \Phi_x\]

The derivatives of the stationary state with respect to \(\kappa\) and \(\omega\) are zero as these do not affect that state, so:

(44)\[\frac{\partial p_{x}}{\partial \kappa} = \frac{\partial p_{x}}{\partial \omega} = 0\]

We calculate the codon frequencies \(\Phi_x\) from the observed nucleotide frequencies.

The original F3X4 method calculated \(\Phi_x\) directly from the empirical alignment frequencies. Specifically, let \(e^p_w\) be the empirical frequency of nucleotide \(w\) at codon position \(p\). In the original F3X4 method,

\(\Phi_x = e^1_{x_1} \times e^2_{x_2} \times e^3_{x_3}\).

This method produces biased codon frequencies because the stop codon nucleotide composition is not taken into account.

To address this issue, we follow the Corrected F3X4 (or CF3X4) method from Pond et al, PLoS One, 5:e11230.

The 12 nucleotide corrected nucleotide frequency parameters \(\phi_w^p\) are estimated from the observed nucleotide frequencies by solving a set of 12 nonlinear equations:

(45)\[\begin{split}e^1_w = \frac{\phi^1_w \times \left(1- \sum\limits_{wyz\epsilon X} \phi^2_y\times\phi^3_z\right)}{1-\sum\limits_{xyz\epsilon X} \phi^1_x\times\phi^2_y\times\phi^3_z}\\

e^2_w = \frac{\phi^2_w \times \left(1- \sum\limits_{ywz\epsilon X} \phi^1_y\times\phi^3_z\right)}{1-\sum\limits_{xyz\epsilon X} \phi^1_x\times\phi^2_y\times\phi^3_z}\\

e^3_w = \frac{\phi^3_w \times \left(1- \sum\limits_{yzw\epsilon X} \phi^1_y\times\phi^2_z\right)}{1-\sum\limits_{xyz\epsilon X} \phi^1_x\times\phi^2_y\times\phi^3_z}\\\end{split}\]

where \(X = \{TAA, TAG, TGA\}\). We use the \(\phi^p_w\) values determined in this way to compute \(\Phi_x = \frac{\phi^1_{x_1} \times \phi^2_{x_2} \times \phi^3_{x_3}}{ \sum\limits_{y} \phi^1_{y_1} \times \phi^2_{y_2} \times \phi^3_{y_3}}\) where \(y \notin X\) ranges over all stop codons.

The definitions above can be used to define a set of matrices \(\mathbf{P_r} = \left[P_{r,xy}\right]\) that give the rate of transition from codon \(x\) to \(y\) at site \(r\). A key computation is to compute the probability of a transition in some amount of elapsed time \(\mu t\). These probabilities are given by

(46)\[\mathbf{M_r}\left(\mu t\right) = e^{\mu t\mathbf{P_r}}.\]

In this section, we deal with how to compute \(\mathbf{M_r}\left(\mu t\right)\) and its derivatives.

Because \(\mathbf{P_r}\) is reversible with stationary state given by the vector \(\mathbf{p_r} = \left[p_{r,x}\right]\), then as described by Bryant, Galtier, and Poursat (2005), the matrix \(\left[\operatorname{diag}\left(\mathbf{p_r}\right)\right]^{\frac{1}{2}} \mathbf{P_r} \left[\operatorname{diag}\left(\mathbf{p_r}\right)\right]^{\frac{-1}{2}}\) is symmetric.

We can use a numerical routine to compute the eigenvalues and orthonormal eigenvectors.

Let \(\mathbf{D_r}\) be a diagonal matrix with elements equal to the eigenvalues, let \(\mathbf{B_r}\) be the matrix whose columns are the right orthonormal eigenvectors (in the same order as the eigenvalues), and note that \(\mathbf{B_r}^{-1} = \mathbf{B_r}^T\).

Then we have \(\left[\operatorname{diag}\left(\mathbf{p_r}\right)\right]^{\frac{1}{2}} \mathbf{P_r} \left[\operatorname{diag}\left(\mathbf{p_r}\right)\right]^{\frac{-1}{2}} = \mathbf{B_r} \mathbf{D_r} \mathbf{B_r}^T\) or equivalently

\[\mathbf{P_r} = \mathbf{A_r} \mathbf{D_r} \mathbf{A_r}^{-1}\]

where

\[\mathbf{A_r} = \left[\operatorname{diag}\left(\mathbf{p_r}\right)\right]^{\frac{-1}{2}} \mathbf{B_r}\]

and

\[\mathbf{A_r}^{-1} = \mathbf{B_r}^T \left[\operatorname{diag}\left(\mathbf{p_r}\right)\right]^{\frac{1}{2}}.\]

The matrix exponentials are then easily calculated as

\[\mathbf{M_r}\left(\mu t\right) = e^{\mu t\mathbf{P_r}} = \mathbf{A_r} e^{\mu t \mathbf{D_r}} \mathbf{A_r}^{-1}\]

We also want to calculate the derivatives of \(\mathbf{M_r}\left(\mu t\right)\) with respect to the other parameters on which \(P_{r,xy}\) depends (e.g., \(\beta\), \(\eta_i\), \(\kappa\), and \(\omega\)).

According to Kalbeisch and Lawless (1985) (see also Kenney and Gu (2012) and Bloom et al (2011)), the derivative with respect to some parameter \(z\) is given by

\[\frac{\partial \mathbf{M_r}\left(\mu t\right)}{\partial z} = \mathbf{A_r} \mathbf{V_{r,z}} \mathbf{A_r}^{-1}\]

where the elements of \(\mathbf{V_{r,z}}\) are

\[\begin{split}V_{xy}^{r,z} =

\begin{cases}

B_{xy}^{r,z} \frac{\exp\left(\mu t D^r_{xx}\right) - \exp\left(\mu t D^r_{yy}\right)}{D^r_{xx} - D^r_{yy}} & \mbox{if $x \ne y$ and $D_{xx}^r \ne D_{yy}^r$}, \\

B_{xy}^{r,z} \mu t \exp\left(\mu t D^r_{xx}\right) & \mbox{if $x \ne y$ and $D_{xx}^r = D_{yy}^r$}, \\

B_{xx}^{r,z} \mu t \exp\left(\mu t D_{xx}^r\right)& \mbox{if $x = y$},

\end{cases}\end{split}\]

where \(D^r_{xx}\) and \(D^r_{yy}\) are the diagonal elements of \(\mathbf{D_r}\), and \(B_{xy}^{r,z}\) are the elements of the matrix \(\mathbf{B_{r,z}}\) defined by

\[\mathbf{B_{r,z}} = \mathbf{A_r}^{-1} \frac{\partial \mathbf{P_r}}{\partial z} \mathbf{A_r}.\]

The aforementioned section defines the substitution probabilities in terms of \(\mu t\) (e.g., Eq. (46)).

Here \(\mu\) is a substitution rate, and \(t\) is the branch length.

If we are freely optimizing all branch lengths, then we just set \(\mu = 1\) so that \(\mu t = t\), and then \(\mu\) effectively drops out.

However, if we have fixed the branch lengths are not optimizing them, then we might want to include a parameter \(\mu\) that effectively re-scales all the fixed branch lengths by a constant.

In this case, \(\mu\) also becomes a free parameter of the model, and we want to compute the derivative of \(\mathbf{M_r}\left(\mu t\right)\) with respect to \(\mu\). This is straightforward:

\[\frac{\partial \mathbf{M_r}\left(\mu t\right)}{\partial \mu} = t \mathbf{P_r} e^{\mu t \mathbf{P_r}} = t \mathbf{P_r} \mathbf{M_r}\left(\mu t\right).\]

Above we describe computing the transition probabilities as a function of branch length.

Here we consider how to use those computations to compute the actual likelihoods on a tree.

We begin by computing the likelihood of the alignment at a specific site.

Let \(\mathcal{S}_r\) denote the set of aligned codons at site \(r\), let \(\mathcal{T}\) by the phylogenetic tree with branch lengths specified, and let \(\mathbf{P_r}\) be the transition matrix at site \(r\) defined above.

Then the likelihood at site \(r\) is \(\Pr\left(\mathcal{S}_r \mid \mathcal{T}, \mathbf{P_r}\right)\).

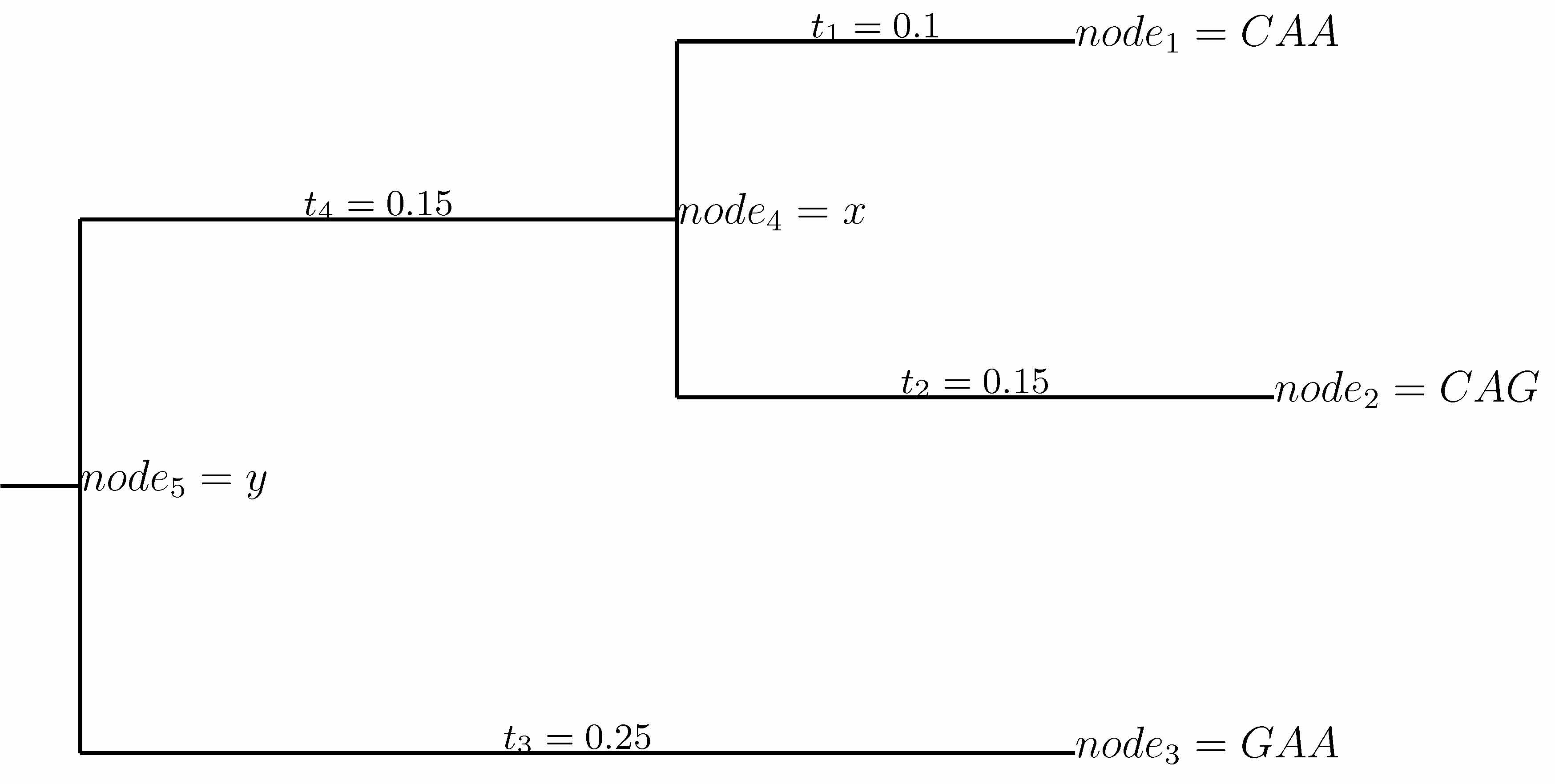

For the example tree above, we can use the pruning algorithm (Felsenstein, J Mol Evol, 1981) to write

\[\Pr\left(\mathcal{S}_r \mid \mathcal{T}, \mathbf{P_r}\right)

=

\sum_y p_{r,y} M_{r,yGAA}\left(t_3\right) \left[\sum_x M_{r,yx}\left(t_4\right) M_{r,xCAA}\left(t_1\right) M_{r,xCAG}\left(t_2\right)\right].\]

Let \(n\) denote a node on a tree, let \(t_n\) denote the length of the branch leading to node \(n\), and let \(\mathcal{d}_1\left(n\right)\) and \(\mathcal{d}_1\left(n\right)\) denote the right and left descendents of node \(n\) for all non-terminal nodes.

Then define the partial conditional likelihood of the subtree rooted at \(n\) as:

\[\begin{split}L_{r,n}\left(x\right) =

\begin{cases}

\delta_{x\mathcal{S}_{r,n}} & \mbox{if $n$ is a tip node with codon $\mathcal{S}_{r,n}$ at site $r$,} \\

1 & \mbox{if $n$ is a tip node with a gap at site $r$,} \\

\left[\sum_y M_{r,xy}\left(t_{\mathcal{d}_1\left(n\right)}\right) L_{r, \mathcal{d}_1\left(n\right)}\left(y\right)\right] \left[\sum_y M_{r,xy}\left(t_{\mathcal{d}_2\left(n\right)}\right) L_{r, \mathcal{d}_2\left(n\right)}\left(y\right)\right] & \mbox{otherwise.}

\end{cases}\end{split}\]

where \(\delta_{xy}\) is the Kronecker delta.

So for instance in the example tree above, \(L_{r,n_4}\left(x\right) = M_{r,xCAA}\left(t_1\right) M_{r,xCAG}\left(t_2\right)\), and \(L_{r,n_5}\left(y\right) = M_{r,yGAA} \sum_x M_{r,yx}\left(t_4\right) L_{r,n_4}\left(x\right)\).

Using this definition, we have

\[\Pr\left(\mathcal{S}_r \mid \mathcal{T}, \mathbf{P_r}\right)

= \sum_x p_{r,x} L_{r,n_{\rm{root}}}\left(x\right)\]

where \(n_{\rm{root}}\) is the root node of tree \(\mathcal{T}\); \(n_{\rm{root}} = n_5\) in the example tree above.

In practice, we usually work with the log likelihoods (always using natural logarithms).

The total likelihood is the sum of the log likelihoods for each site:

\[\ln \left[ \Pr\left(\mathcal{S} \mid \mathcal{T}, \left\{\mathbf{P_r}\right\}\right) \right] = \sum_r \ln \left[\Pr\left(\mathcal{S}_r \mid \mathcal{T}, \mathbf{P_r}\right) \right].\]

We next consider how to compute the derivatives with respect to some model parameter.

Let \(\alpha\) denote the model parameter in question, and assume that we have already determined \(\frac{M_{r,xy}\left(t\right)}{\partial \alpha}\).

By the chain rule, we have

\[\begin{split}\frac{\partial L_{r,n}\left(x\right)}{\partial \alpha} =

\begin{cases}

0 & \mbox{if $n$ is a tip node,}, \\

\\

\left[\sum_y \frac{\partial M_{r,xy}\left(t_{\mathcal{d}_1\left(n\right)}\right)}{\partial \alpha} L_{r, \mathcal{d}_1\left(n\right)}\left(y\right) + M_{r,xy}\left(t_{\mathcal{d}_1\left(n\right)}\right) \frac{\partial L_{r, \mathcal{d}_1\left(n\right)}\left(y\right)}{\partial \alpha}\right]

\left[\sum_y M_{r,xy}\left(t_{\mathcal{d}_2\left(n\right)}\right) L_{r, \mathcal{d}_2\left(n\right)}\left(y\right)\right] & \mbox{otherwise.} \\

+ \left[\sum_y M_{r,xy}\left(t_{\mathcal{d}_1\left(n\right)}\right) L_{r, \mathcal{d}_1\left(n\right)}\left(y\right)\right]

\left[\sum_y \frac{\partial M_{r,xy}\left(t_{\mathcal{d}_2\left(n\right)}\right)}{\partial \alpha} L_{r, \mathcal{d}_2\left(n\right)}\left(y\right) + M_{r,xy}\left(t_{\mathcal{d}_2\left(n\right)}\right) \frac{\partial L_{r, \mathcal{d}_2\left(n\right)}\left(y\right)}{\partial \alpha}\right] & \\

\end{cases}\end{split}\]

The derivative of the likelihood at the site is then

\[\frac{\partial \Pr\left(\mathcal{S}_r \mid \mathcal{T}, \mathbf{P_r}\right)}{\partial \alpha}

= \sum_x \left(\frac{\partial p_{r,x}}{\partial \alpha} L_{r,n_{\rm{root}}}\left(x\right) + p_{r,x} \frac{\partial L_{r,n_{\rm{root}}}\left(x\right)}{\partial \alpha}\right)\]

and the derivative of the log likelihood at the site is

\[\frac{\partial \ln\left[\Pr\left(\mathcal{S}_r \mid \mathcal{T}, \mathbf{P_r}\right)\right]}{\partial \alpha}

= \frac{\sum_x \left(\frac{\partial p_{r,x}}{\partial \alpha} L_{r,n_{\rm{root}}}\left(x\right) + p_{r,x} \frac{\partial L_{r,n_{\rm{root}}}\left(x\right)}{\partial \alpha}\right)}

{\Pr\left(\mathcal{S}_r \mid \mathcal{T}, \mathbf{P_r}\right)}.\]

The derivative of the overall log likelihood is

\[\frac{\partial \ln \left[ \Pr\left(\mathcal{S} \mid \mathcal{T}, \left\{\mathbf{P_r}\right\}\right) \right]}{\partial \alpha}

= \sum_r \frac{\partial \ln \left[\Pr\left(\mathcal{S}_r \mid \mathcal{T}, \mathbf{P_r}\right) \right]}{\partial \alpha}.\]

For larger trees, there can be numerical underflow due to multiplication of lots of small numbers when computing the likelihoods. This issue, and how it can be solved by re-scaling the likelihoods during the calculation, is discussed on page 426 of Yang, J Mol Evol, 51:423-432.

Let \(L_{r,n}\left(x\right)\) be the partial conditional likelihood at node \(n\) of codon \(x\) at site \(r\) as defined above. These partial conditional likelihoods can get very small as we move up the tree towards the root, as they are recursively defined as the products of very small numbers. For the scaling to avoid underflow, we define the scaled partial condition likelilhood as

\[\tilde{L}_{r,n}\left(x\right) = \frac{L_{r,n}\left(x\right)}{U_{r,n} \times \prod\limits_{k < n} U_{r,k}}\]

where we use \(k < n\) to indicate all nodes \(k\) that are descendants of \(n\), and where

\[\begin{split}U_{r,n} =

\begin{cases}

1 & \mbox{if $n$ is divisible by $K$,} \\

\max_x\left[L_{r,n}\left(x\right) \times \prod\limits_{k < n} U_{r,k}\right] & \mbox{otherwise}

\end{cases}\end{split}\]

where \(K\) is the frequency with which we re-scale the likelihoods. A reasonable value of \(K\) might be 5 or 10. Effectively, this means that every \(K\) nodes we are re-scaling so that the largest partial conditional likelihood is one.

With this re-scaling, the total likelihood at site \(r\) is then

\[\Pr\left(\mathcal{S}_r \mid \mathcal{T}, \mathbf{P_r}\right)

= \left(\sum\limits_x p_{r,x} \tilde{L}_{r,n_{\rm{root}}}\left(x\right)\right) \times

\left(\prod\limits_n U_{r,n}\right)\]

and the total log likelihood at site \(r\) is

\[\ln\left[\Pr\left(\mathcal{S}_r \mid \mathcal{T}, \mathbf{P_r}\right)\right]

= \ln\left(\sum\limits_x p_{r,x} \tilde{L}_{r,n_{\rm{root}}}\left(x\right)\right) +

\sum\limits_n \ln\left(U_{r,n}\right).\]

The derivative is then

\[\begin{split}\frac{\partial \ln\left[\Pr\left(\mathcal{S}_r \mid \mathcal{T}, \mathbf{P_r}\right)\right]}{\partial \alpha} &=&

\frac{\frac{\partial}{\partial \alpha}\left[ \left(\sum\limits_x p_{r,x} \tilde{L}_{r,n_{\rm{root}}}\left(x\right)\right) \times \left(\prod\limits_n U_{r,n}\right) \right]}{\left(\sum\limits_x p_{r,x} \tilde{L}_{r,n_{\rm{root}}}\left(x\right)\right) \times \left(\prod\limits_n U_{r,n}\right)} \\

&=&

\frac{\left(\sum\limits_x \left[\frac{\partial p_{r,x}}{\partial \alpha}

\tilde{L}_{r,n_{\rm{root}}}\left(x\right) + p_{r,x}\frac{\partial \tilde{L}_{r,n_{\rm{root}}}\left(x\right)}{\partial \alpha}\right]\right) \times \left(\prod\limits_n U_{r,n}\right) + \left(\sum\limits_x p_{r,x} \tilde{L}_{r,n_{\rm{root}}}\left(x\right)\right) \times \frac{\partial \left(\prod\limits_n U_{r,n}\right)}{\partial \alpha}}

{\left(\sum\limits_x p_{r,x} \tilde{L}_{r,n_{\rm{root}}}\left(x\right)\right) \times \left(\prod\limits_n U_{r,n}\right)} \\

&=&

\frac{\sum_x \left(\frac{\partial p_{r,x}}{\partial \alpha} \tilde{L}_{r,n_{\rm{root}}}\left(x\right) + p_{r,x} \frac{\partial \tilde{L}_{r,n_{\rm{root}}}\left(x\right)}{\partial \alpha}\right)}{\sum\limits_x p_{r,x} \tilde{L}_{r,n_{\rm{root}}}\left(x\right)} + \frac{\frac{\partial \left(\prod\limits_n U_{r,n}\right)}{\partial \alpha}}{\prod\limits_n U_{r,n}}.\end{split}\]

For reasons that are not immediately obvious to me but are clearly verified by numerical testing, this last term of \(\frac{\frac{\partial \left(\prod\limits_n U_{r,n}\right)}{\partial \alpha}}{\prod\limits_n U_{r,n}}\) is zero, and so

\[\frac{\partial \ln\left[\Pr\left(\mathcal{S}_r \mid \mathcal{T}, \mathbf{P_r}\right)\right]}{\partial \alpha} =

\frac{\sum_x \left(\frac{\partial p_{r,x}}{\partial \alpha} \tilde{L}_{r,n_{\rm{root}}}\left(x\right) + p_{r,x} \frac{\partial \tilde{L}_{r,n_{\rm{root}}}\left(x\right)}{\partial \alpha}\right)}{\sum\limits_x p_{r,x} \tilde{L}_{r,n_{\rm{root}}}\left(x\right)}.\]

In practice, we work with the \(\tilde{L}_{r,n}\left(x\right)\) values, and then apply the correction of adding \(\sum_n \ln\left(U_r,n\right)\) at the end.

When we optimize with the \(P_{r,xy}\) substitution matrices described above, the resulting branch lengths are not in units of substitutions per site. Therefore, for tree input / output, we re-scale the branch lengths so that they are in units of substitution per site.

In a single unit of time, the probability that if site \(r\) is initially \(x\), then it will undergo a substitution to some other codon \(y\) is \(\sum_{y \ne x} P_{r,xy} = -P_{r,xx}\). Since the equilibrium probability that site \(r\) is \(x\) is \(p_{r,x}\), then the probability that site \(r\) undergoes a substitution in a unit of time is \(-\mu \sum_x p_{r,x} P_{r,xx}\). So averaging over all \(L\) sites, the probability that the average site will undergo a substitution in a unit of time is \(-\frac{\mu}{L} \sum_{r=1}^L \sum_x p_{r,x} P_{r,xx}\).

Therefore, if we optimize the branch lengths \(t_b\) and the model parameters in \(P_{r,xy}\), and then at the end re-scale the branch lengths to \(t_b' = t_b \times \frac{-\mu}{L} \sum_{r=1}^L \sum_x p_{r,x} P_{r,xx}\) then the re-scaled branch lengths \(t_b\) are in units of substitutions per sites. Therefore, for input and output to phydms, we assume that input branch lengths are already in units of substitutions per site, and scale them from \(t_b'\) to \(t_b\). Optimization is performed on \(t_b\), and then for output we re-scale the optimized branch lengths from \(t_b\) to \(t_b'\).

The models described above fit a single value to each model parameter.

We can also fit a distribution of values across sites for one model parameter \(\lambda\).

For instance, when \(\lambda\) is the \(\omega\) of the YNGKP models, we get the YNGKP_M5 model described in Yang, Nielsen, Goldman, and Krabbe Pederson, Genetics, 155:431-449.

Specifically, let the \(\lambda\) values be drawn from \(K\) discrete categories with lambda values \(\lambda_0, \lambda_2, \ldots, \lambda_{K-1}\), and give equal weight to each category. Then the overall likelihood at site \(r\) is

\[\Pr\left(\mathcal{S}_r \mid \mathcal{T}, \mathbf{P_r}\right) =

\frac{1}{K} \sum_{k=0}^{K-1} \Pr\left(\mathcal{S}_r \mid \mathcal{T}, \mathbf{P_r}_{\lambda = \lambda_k}\right)\]

and the derivative with respect to any non-\(\lambda\) model parameter \(\alpha\) is simply

\[\frac{\partial \Pr\left(\mathcal{S}_r \mid \mathcal{T}, \mathbf{P_r}\right)}{\partial \alpha} =

\frac{1}{K} \sum_{k=0}^{K-1} \frac{\partial \Pr\left(\mathcal{S}_r \mid \mathcal{T}, \mathbf{P_r}_{\lambda = \lambda_k}\right)}{\partial \alpha}.\]

The different \(\lambda_k\) values are drawn from the means of a gamma-distribution discretized into \(K\) categories as described by Yang, J Mol Evol, 39:306-314. Specifically, this gamma distribution is described by a shape parameter \(\alpha_{\lambda}\) and an inverse scale parameter \(\beta_{\lambda}\) such that the probability density function of a continuous \(\lambda\) is given by

\[g\left(\lambda; \alpha_{\lambda}, \beta_{\lambda}\right) = \frac{\left(\beta_{\alpha}\right)^{\alpha_{\lambda}} e^{-\beta_{\lambda} \lambda} \lambda^{\alpha_{\lambda} - 1}}{\Gamma\left(\alpha_{\lambda}\right)}.\]

This function can be evaluated by scipy.stats.gamma.pdf(lambda, alpha_lambda, scale=1.0 / beta_lambda). Note also that the mean of this distribution is \(\frac{\alpha_{\lambda}}{\beta_{\lambda}}\) and the variance is \(\frac{\alpha_{\lambda}}{\left(\beta_{\lambda}\right)^2}\).

The lower and upper boundaries of the interval for each category \(k\) are

\[\begin{split}\lambda_{k,\rm{lower}} &=& Q_{\Gamma}\left(\frac{k}{K}; \alpha_{\lambda}, \beta_{\lambda}\right) \\

\lambda_{k,\rm{upper}} &=& Q_{\Gamma}\left(\frac{k + 1}{K}; \alpha_{\lambda}, \beta_{\lambda}\right)\end{split}\]

where \(Q_{\Gamma}\) is the quantile function (or percent-point function) of the gamma distribution. This function can be evaluated by scipy.stats.gamma.ppf(k / K, alpha_lambda, scale=1.0 / beta_lambda).

The mean for each category \(k\) is

\[\lambda_k = \frac{\alpha_{\lambda}K}{\beta_{\lambda}} \left[\gamma\left(\lambda_{k,\rm{upper}} \beta_{\lambda}, \alpha_{\lambda} + 1\right) - \gamma\left( \lambda_{k,\rm{lower}} \beta_{\lambda}, \alpha_{\lambda} + 1 \right)\right]\]

where \(\gamma\) is the lower-incomplete gamma function and can be evaluated by scipy.special.gammainc(alpha_lambda + 1, omega_k_upper * beta_lambda).

Note that \(\lambda_k\) is not actually a free parameter, as it is determined by \(\alpha_{\lambda}\) and \(\beta_{\lambda}\).

The derivative of the log likelihood at site \(r\) with respect to these parameters is simply

\[\begin{split}\frac{\partial \Pr\left(\mathcal{S}_r \mid \mathcal{T}, \mathbf{P_r}\right)}{\partial \alpha_{\lambda}} &=&

\frac{1}{K} \sum\limits_{k=0}^{K-1} \frac{\partial \lambda_k}{\partial \alpha_{\lambda}}\frac{\partial \Pr\left(\mathcal{S}_r \mid \mathcal{T}, \mathbf{P_r}_{\lambda = \lambda_k}\right)}{\partial \lambda_k} \\

\frac{\partial \Pr\left(\mathcal{S}_r \mid \mathcal{T}, \mathbf{P_r}\right)}{\partial \beta_{\lambda}} &=&

\frac{1}{K} \sum\limits_{k=0}^{K-1} \frac{\partial \lambda_k}{\partial \beta_{\lambda}}\frac{\partial \Pr\left(\mathcal{S}_r \mid \mathcal{T}, \mathbf{P_r}_{\lambda = \lambda_k}\right)}{\partial \lambda_k}.\end{split}\]

The derivatives \(\frac{\partial \lambda_k}{\partial \alpha_{\lambda}}\) and \(\frac{\partial \lambda_k}{\partial \beta_{\lambda}}\) are computed numerically using the finite-difference method.

The section above describes how to compute the derivatives with respect to parameters (e.g., model parameters) that affect all parts of the tree.

In many cases, we may want to optimize individual branch lengths rather than the overall mutation rate \(\mu\).

In this case, we need to compute the derivatives with respect to the branch lengths.

This is somewhat simpler for each individual branch length, since each individual branch length only affects part of the tree.

Specifically, for each internal node \(n\),

\[\begin{split}\frac{\partial L_{r,n}\left(x\right)}{\partial t_{\mathcal{d}_1\left(n\right)}} &=& \frac{\partial}{\partial t_{\mathcal{d}_1\left(n\right)}} \left(\left[\sum_y M_{r,xy}\left(t_{\mathcal{d}_1\left(n\right)}\right) L_{r,\mathcal{d}_1\left(n\right)}\left(y\right)\right] \left[\sum_y M_{r,xy}\left(t_{\mathcal{d}_2\left(n\right)}\right) L_{r,\mathcal{d}_2\left(n\right)}\left(y\right)\right] \right) \\

&=& \left[\sum_y \frac{\partial M_{r,xy}\left(t_{\mathcal{d}_1\left(n\right)}\right)}{\partial t_{\mathcal{d}_1\left(n\right)}} L_{r,\mathcal{d}_1\left(n\right)}\left(y\right)\right] \left[\sum_y M_{r,xy}\left(t_{\mathcal{d}_2\left(n\right)}\right) L_{r,\mathcal{d}_2\left(n\right)}\left(y\right)\right]\end{split}\]

where

\[\frac{\partial M_{r,xy}\left(t\right)}{\partial t} = \mu \mathbf{P_r} e^{\mu t \mathbf{P_r}} = \mu \mathbf{P_r} \mathbf{M_r}\left(\mu t\right).\]

Therefore, for every node \(n\) with descendents \(n_1\) and \(n_2\):

\[\begin{split}\frac{\partial L_{r,n}\left(x\right)}{\partial t_{n'}} =

\begin{cases}

0 & \mbox{if $n'$ is not a descendent of $n$} \\

\left[\sum_y \frac{\partial M_{r,xy}\left(t_{n'}\right)}{\partial t_{n'}} L_{r,n'}\left(y\right)\right] \left[\sum_y M_{r,xy}\left(t_{n_2}\right) L_{r,n_2}\left(y\right)\right] & \mbox{if $n_1$ is $n'$} \\

\left[\sum_y M_{r,xy}\left(t_{n_1}\right) \frac{\partial L_{r,n_1}\left(y\right)}{\partial t_{n'}}\right] \left[\sum_y M_{r,xy}\left(t_{n_2}\right) L_{r,n_2}\left(y\right)\right] & \mbox{if $n'$ is descendent of $n_1$} \\

\end{cases}\end{split}\]

and

\[\frac{\partial \Pr\left(\mathcal{S}_r \mid \mathcal{T}, \mathbf{P_r}\right)}{\partial t_n} = \frac{\partial L_{r,n_{\rm{root}}}\left(x\right)}{\partial t_n} \times p_{r,x}.\]

The actual optimization is performed with the L-BFGS optimizer implemented in scipy as scipy.optimize.minimize(method='L-BFGS-B'). The approach is to first optimize all the model parameters along with branch-scaling parameter \(\mu\), then to optimize all the branch lengths, and to continue to repeat until any optimization step fails to lead to substantial further improvement in likelihood.

During the branch-length optimization, all branch lengths are updated simultaneously. This appears to be the minority approach in phylogenetics (most software does one branch length at a time), but reportedly some software does use simultaneous branch-length optimization (see table on page 18 here).